2024

Concrete Crack Detection and Image Generation Using Machine Learning

In this project, I developed a machine learning pipeline to generate high-quality (512x512) images of cracked concrete while minimizing GPU costs. I used a dataset of concrete images in various resolutions up to 256x256. To address the challenge of limited labeled data, I implemented data augmentation and developed a Self-Supervised Learning (SSL) model to distinguish between cracked and uncracked images. The SSL stage took advantage of the excess unlabeled data via a pretext task, helping the model learn useful feature representations before I transferred its weights to a classification model and trained that model on the available labeled data. I then used the classifier to label all remaining unlabeled data. Next, I built a diffusion model trained on the cracked images to generate images of size 128x128. Finally, I trained a Generative Adversarial Network (GAN) to generate high-resolution images, focusing on producing realistic crack patterns, training it to up-res images from 32x32 to 64x64 to 128x128. At this point, I could feed the 128x128 images from the diffusion model through the GAN to up-res them to a size of 512x512.
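
To illustrate what a pretext task can look like, here is a minimal sketch in plain Python. It assumes rotation prediction as the pretext task, which is only one common choice and not necessarily the one used in this project. The helper names `rotate90` and `make_pretext_examples` are hypothetical, and the 2x2 nested list stands in for a real image.

```python
def rotate90(image):
    """Rotate a 2D list-of-lists image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def make_pretext_examples(image):
    """Return (rotated_image, label) pairs, where label k means k quarter turns.

    The labels come for free from the transformation itself, so no human
    annotation is needed (assumed pretext task: rotation prediction).
    """
    examples = []
    current = [list(row) for row in image]
    for k in range(4):
        examples.append((current, k))
        current = rotate90(current)
    return examples


# A tiny 2x2 stand-in for an unlabeled concrete image.
unlabeled = [[1, 2],
             [3, 4]]
pretext_set = make_pretext_examples(unlabeled)
```

A network trained to predict the rotation label has to learn something about image structure, and those weights can then initialize the cracked/uncracked classifier.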

https://colab.research.google.com/drive/1IuYWwM2iVEApv5kJEx_2kyYlTOLb6ZXb?usp=sharing

Voice-Activated System with Machine Learning for Smart Control (Sensing Systems)

In this project, I developed a voice activation system using machine learning to control an RGB LED and display the temperature based on user commands. The system is designed to recognize the voice commands "Red", "Green", "Yellow", and "Temperature". When the user speaks a command, the system activates an RGB LED to display the corresponding color or reads the current temperature and shows it on a 4-digit LED display. Data for the voice commands is collected by recording 20 samples per command. These samples are then preprocessed, and machine learning models are trained to classify the commands. After evaluating multiple models, a K-Nearest Neighbors (KNN) classifier is selected based on its performance metrics (precision, recall, F1 score, and accuracy). The system is integrated with the trained model, allowing real-time classification of voice commands and system response, including triggering the appropriate LED color or displaying the temperature.
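
The KNN idea can be sketched in a few lines of plain Python. This is an illustration only: the function `knn_predict`, the 2-D feature vectors, and the value `k=3` are assumptions, not the features or settings used in the project.

```python
from collections import Counter
import math


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors; real audio features would have more dimensions.
train = [
    ((0.10, 0.20), "red"), ((0.20, 0.10), "red"), ((0.15, 0.15), "red"),
    ((0.90, 0.80), "green"), ((0.80, 0.90), "green"), ((0.85, 0.85), "green"),
]
prediction = knn_predict(train, (0.12, 0.18))
```

With only 20 recordings per command, a method like KNN that needs no lengthy training step is a natural candidate to compare against other models.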

Confusion matrices comparing model performance

Machine Learning Material Classification and Real-Time Prediction System (Sensing Systems)

This project developed a system that classifies materials—paper, foil, and a water bottle—based on light intensity readings from a photocell sensor. The system captures data by positioning each material over the sensor and initiating data collection with a push button. The collected data is then processed and used to train machine learning models, which are evaluated based on metrics such as precision, recall, F1 score, and ROC curves. The best-performing model is deployed for real-time classification, where it processes live sensor data to predict the material type. The classification result is displayed on a 4-digit LED screen, with an audible signal indicating the outcome. This project demonstrates how sensor data can be combined with machine learning techniques to deliver real-time predictions for material classification.
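
As a rough sketch of how the per-class metrics mentioned above are computed, the snippet below derives precision, recall, and F1 for one class. The function name `per_class_metrics` and the label lists are made up for illustration and are not results from this project.

```python
def per_class_metrics(y_true, y_pred, positive):
    """Return (precision, recall, f1) treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labels, for illustration only.
y_true = ["paper", "paper", "foil", "foil", "bottle", "bottle"]
y_pred = ["paper", "foil", "foil", "foil", "bottle", "paper"]
foil_precision, foil_recall, foil_f1 = per_class_metrics(y_true, y_pred, "foil")
```

In this toy data every foil sample is found (recall of 1.0), but one paper sample is also labeled foil, which lowers precision to 2/3.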

Automated Pet Hydration and Feeding System (Sensing Systems)

This project aimed to design a low-cost automated system to monitor pet hydration and manage feeding. Using a Raspberry Pi Pico, capacitive touch sensors, water level sensors, and a vibratory motor feeder, the system alerts users when the water level is low with an LED indicator and dispenses food only when the pet interacts with the touch sensor. Key lessons learned include the challenge of training pets to interact consistently with the touch sensor and issues with food dispensing accuracy, which could be improved with a different mechanism. A more robust notification system would also enhance user interaction.

2023

Autonomous Navigation Using ROS2 (Robotics)

In this robotics project, I applied my knowledge of robot autonomy to develop a differential drive robot in ROS2 that could successfully navigate maze-like environments. I designed and implemented mapping, planning, and control nodes, using tools like Gazebo and RViz. My work involved creating and troubleshooting callbacks for real-time map updates, developing path-planning algorithms, and tuning PID controllers for smooth waypoint navigation. Through this project, I developed my skills in ROS2, sensor integration, debugging complex systems, and optimizing robot performance in dynamic scenarios. The accompanying video showcases the culmination of my learning and efforts, demonstrating the robot's autonomous navigation capabilities in challenging maze-like environments.
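
For readers unfamiliar with PID control, here is a minimal sketch in plain Python rather than a ROS2 node. The `PID` class, the gains, and the toy plant (heading rate equals the command) are assumptions chosen for illustration, not the controller or values used on the robot.

```python
class PID:
    """Textbook PID controller operating on an error signal."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Toy simulation (assumed plant): the heading changes at the commanded rate.
pid = PID(kp=2.0, ki=0.0, kd=0.1)
heading, target, dt = 0.0, 1.0, 0.05
for _ in range(200):
    heading += pid.update(target - heading, dt) * dt
```

Tuning amounts to adjusting `kp`, `ki`, and `kd` until the heading settles on the target quickly without overshoot or oscillation.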

Lacuna Masakhane Parts of Speech Classification Challenge: NLP for Low-Resource Languages (Deep Neural Networks)

This project highlights my work in natural language processing (NLP), where I participated in the Lacuna Masakhane Parts of Speech Classification Challenge. The competition tasked us with building a machine learning solution capable of accurately classifying 17 parts of speech for the Luo and Setswana languages—two low-resource African languages. One of the primary challenges was the lack of ground truth data for these target languages, making model evaluation difficult. Despite this, we leveraged transfer learning with pre-trained models like Afro-xlmr and experimented with hyperparameter tuning to improve our model's performance. We also focused on optimizing the use of hardware resources, utilizing GPUs and high-RAM machines to handle the large-scale models. While our best score was 34%, this project deepened my understanding of NLP challenges, model fine-tuning, and the importance of domain-specific models, such as those tailored for African languages. This experience has been invaluable in refining my technical and problem-solving skills in the field of deep learning.

2022

Flybrix Quadcopter Control and Sensor Fusion (UAV Systems and Control)

Working with the Flybrix quadcopter hardware provided practical experience in UAV control and sensor fusion. I designed and implemented nested PID control loops, derived PIR controller equations, and tuned them for stable operation. Using a test stand, I collected and analyzed step response data for roll and pitch, enhancing my understanding of system dynamics. Additionally, I implemented and tuned an Attitude and Heading Reference System (AHRS) based on an onboard IMU, deriving Kalman Filter equations and adjusting parameters to optimize performance. This project deepened my skills in control system design, sensor integration, and debugging real-world hardware, preparing me for more advanced UAV applications.
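
The predict/update structure of a Kalman filter can be sketched with a single-state example. This is a deliberately simplified scalar version, assuming one angle estimated from a gyro rate and an accelerometer-derived angle. The function `kalman_step` and the noise values `q` and `r` are hypothetical, and a real AHRS tracks more states.

```python
def kalman_step(angle, variance, gyro_rate, accel_angle, dt, q=0.01, r=0.5):
    """One predict/update cycle of a scalar Kalman filter for a single angle."""
    # Predict: integrate the gyro rate; uncertainty grows by the process noise q.
    angle_pred = angle + gyro_rate * dt
    var_pred = variance + q
    # Update: blend in the accelerometer angle, weighted by the Kalman gain.
    gain = var_pred / (var_pred + r)
    angle_new = angle_pred + gain * (accel_angle - angle_pred)
    var_new = (1.0 - gain) * var_pred
    return angle_new, var_new


# Stationary vehicle tilted at 10 degrees: the gyro reads zero, the accelerometer reads 10.
angle, variance = 0.0, 1.0
for _ in range(100):
    angle, variance = kalman_step(angle, variance, gyro_rate=0.0,
                                  accel_angle=10.0, dt=0.01)
```

Raising `r` makes the filter trust the accelerometer less and respond more slowly, which is the kind of trade-off that parameter tuning addresses.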

Pitch command and step response

PID loop and equivalent PIR

Quadcopter Simulation and Closed-Loop Control Design (UAV Systems and Control)

This project gave me hands-on experience in designing and analyzing closed-loop control systems for a quadcopter simulation. I worked on deriving transfer functions, implementing autopilot algorithms, and comparing analytical models with numerical simulations to ensure accuracy. I developed skills in tuning controllers to achieve stable performance under real-world conditions, such as handling winds and sensor biases, and analyzing system responses using MATLAB. The process also improved my understanding of state estimation, system linearization, and troubleshooting complex simulation environments, providing a solid foundation for designing reliable UAV control systems.

Optimal Control and Simulation: A Spacecraft Trajectory Design Project

In this project, I applied the principles of optimal control and reinforcement learning to model and optimize a spacecraft's trajectory in the lunar gravitational field. By implementing nonlinear equations of motion in MATLAB and solving them through direct transcription, I designed a control strategy to minimize the time required to reach a target orbital radius. The optimization process incorporated boundary conditions, dynamic constraints, and control limits, with stochastic variability introduced via a random spacecraft mass. I validated the analytical results by simulating the trajectory in Simulink, refining my understanding of numerical optimization and dynamic behavior analysis. Through this project, I developed skills in translating mathematical models into computational frameworks, tuning parameters for convergence, and interpreting the effects of uncertainties on control strategies. The class deepened my understanding of advanced topics in optimal control, such as stochastic systems, numerical gradient computation, and reinforcement learning techniques, while enhancing my ability to integrate theory with practical simulations in MATLAB, Simulink, and other computational environments.

2021

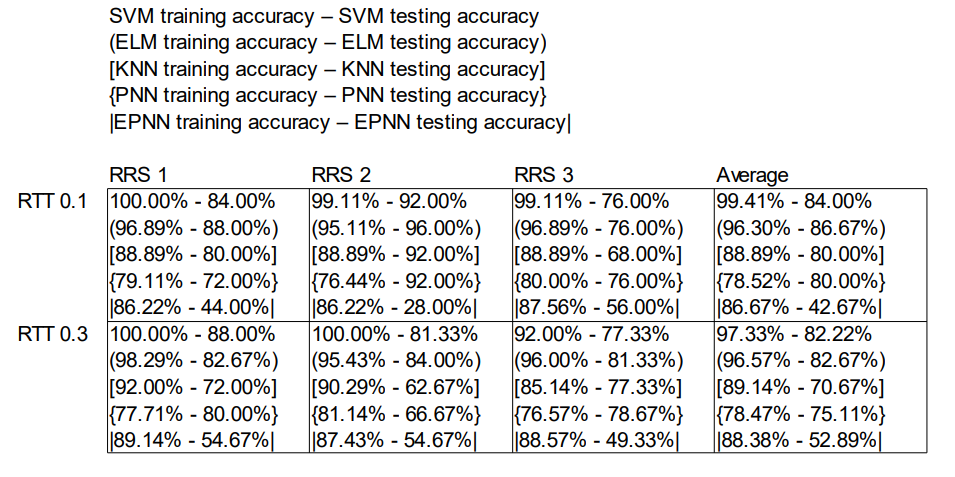

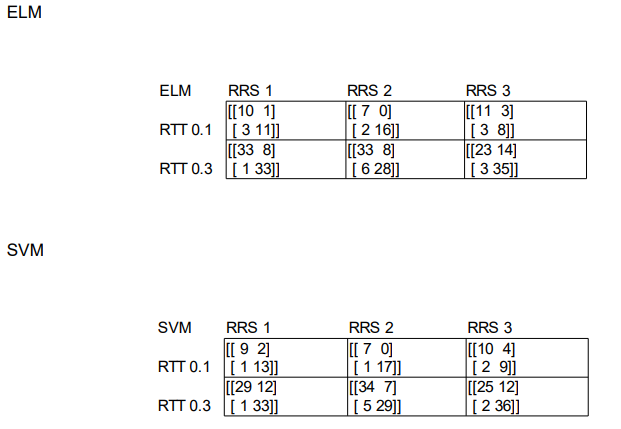

Wall-Following Move Classification (Applied Machine Learning)

A course project in Python that classifies the movement of a wall-following robot based on 24 ultrasound sensor readings read in from a repository. The program runs through 5 separate supervised machine learning techniques (ELM, SVM, KNN, PNN, and EPNN) to determine the most accurate method of classifying moves. I also analyzed the results with confusion matrices to see whether any of the moves were often being misclassified.
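
A confusion matrix of the kind used in that analysis can be built with a short helper. The sketch below is illustrative: the function `confusion_matrix`, the move labels, and the predictions are hypothetical and do not come from the project data.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


# Hypothetical move labels and predictions, for illustration only.
labels = ["forward", "sharp_right", "slight_left"]
y_true = ["forward", "forward", "sharp_right", "slight_left", "sharp_right"]
y_pred = ["forward", "sharp_right", "sharp_right", "slight_left", "forward"]
matrix = confusion_matrix(y_true, y_pred, labels)
```

Off-diagonal entries show which pairs of moves a model confuses, which is more informative than a single accuracy number when comparing classifiers.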

2013

Battleship

My first ever project was a recreation of Battleship. I programmed it in C++ and used a game software development kit called Dark GDK for visualization and mouse operations. This was also my first attempt at implementing object-oriented programming practices in my code.

Networked Robotic Systems Laboratory (NRSL)

During undergrad, I volunteered at NRSL, where I outfitted Roombas with BeagleBoards and integrated them with sonar sensors. I also installed a Linux distribution on SD cards for use in the BeagleBoards.